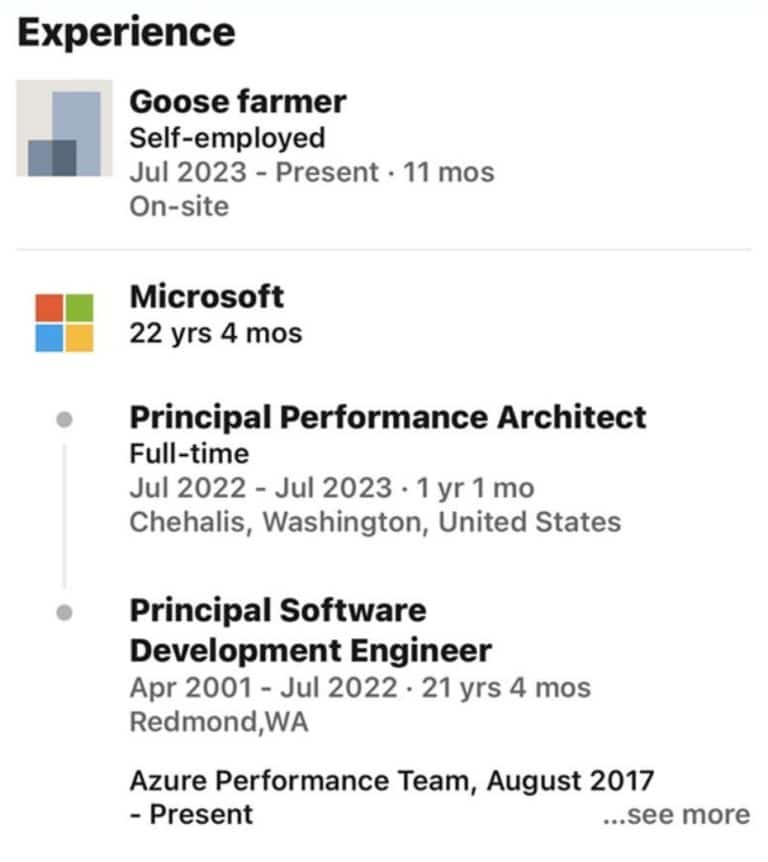

I came across this awesome picture a while ago and it really inspired me, in a big way.

It has been exhausting working in IT for so many years, doing so many different things, and still constantly feeling like I’m behind the technology curve. Learning new stuff started to feel Sisyphean and pointless, and in this industry that’s a sign of burnout or something worse. In truth it probably had a lot to do with the fatigue of being disengaged from my work. I lost my ikigai :(

Around this time I also had to fly back to Canada to attend a wedding, see some friends, and retrieve some superficially useless but nevertheless prized possessions from my storage locker. Among these were an old gaming PC and other assorted hardware I felt compelled to repurpose. I figured it would be fun to host a couple of things for myself and some friends, but what happened next changed the landscape of self-hosting forever.

I was already fed up with the usurious, rent-seeking and predatory nature of various services so I decided to bite the bullet and consolidate everything into this machine. Nothing new here, but this one is special because it is mine.

It also gave me the freedom to play with some new tools, which is one of the best ways to gain understanding and broaden knowledge.

The hardware

Assembled from the desiccated remains of previous machines, this system is surprisingly performant and robust even after a year+ of continuous tinkering.

- CPU: Intel i7-3770K

- 20GB RAM. It was way more but some sticks died and troubleshooting random restarts and kernel panics took years off my life. I don’t want to talk about it.

- GPU: Radeon R9 380

- Storage: 300GB SSD for the OS + boot disk, and 3 x 2TB + 1TB HDDs for data.

Storage

I chose to set up ZFS because software RAID is cool, and during my testing of swapping around drives and importing/exporting pools, it worked flawlessly.

A ZFS pool is composed of one or more vdevs, and each vdev is composed of one or more physical disks. In any RAID mode other than striped (no parity, one dead drive destroys the entire pool), every disk in a vdev is treated as if it were the size of the smallest one, so the raw capacity of the vdev scales down to the capacity of the smallest disk x number of disks, before any space is set aside for redundancy. So in my case I would get at most 4TB instead of 7TB in any real RAID mode. But since the original idea was to recycle these parts and clean out storage, I opted to live dangerously and just go for striped. If he dies, he dies. The only valuable data I back up is music and library metadata, which goes to my QNAP NAS on the LAN via Restic:

$ restic -r sftp:admin@eldritch-lib:/share/generic-wizard-tower/restic-repo --verbose backup /music-dir

Everything else is just version-controlled Ansible playbooks, including secrets encrypted with ansible-vault, on GitHub. Eventually I will deploy a GitLab and a Bitwarden instance, in which case the pool will definitely be rebuilt with disk failure in mind, but for now this is fine.
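To make the capacity trade-off from this section concrete, here is a small sketch of the arithmetic. The function names are made up for illustration, the disk sizes are the ones from the hardware list, and the "redundant" figure is the raw size before parity or mirroring overhead is subtracted.

```shell
# Striped pool: every disk contributes its full size (sizes in TB).
striped_capacity() {
  local total=0 d
  for d in "$@"; do
    total=$((total + d))
  done
  echo "$total"
}

# Redundant vdev: each disk counts as if it were the smallest one.
# This is the raw figure, before space for redundancy is set aside.
redundant_raw_capacity() {
  local min=$1 d
  for d in "$@"; do
    if [ "$d" -lt "$min" ]; then
      min=$d
    fi
  done
  echo $((min * $#))
}

# 3 x 2TB + 1 x 1TB, as in the hardware list
echo "striped: $(striped_capacity 2 2 2 1) TB"
echo "redundant (raw): $(redundant_raw_capacity 2 2 2 1) TB"
```

The single 1TB disk is what drags the redundant layout down: the other three disks would each leave 1TB unused.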

Why no virtualization? Why no Proxmox?

For much the same reason that I haven’t bothered to deploy a kuberneetus cluster: it’s overkill for my purposes.

Although the CPU is old, it does support hardware virtualization, which means I can run a Windows VM in libvirt, which means I can run an SP-Tarkov server. Luckily that wasn’t necessary because clever gamers have managed to containerize it instead, and I was shocked to discover it actually works!

Anyway, if you want to verify whether your CPU supports hardware virtualization, execute cat /proc/cpuinfo | grep --color vmx and look for the vmx flag (on AMD CPUs the equivalent flag is svm). Additionally, it may or may not have to be enabled in the BIOS.
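If you would rather have a yes/no answer than scan the flags by eye, the same check can be wrapped in a small function. This is only a sketch: virt_flag is a made-up helper name, and it only looks at the first flags line it finds.

```shell
# Print which hardware virtualization flag a cpuinfo dump contains, if any.
# Reads from stdin, so it works with /proc/cpuinfo or a saved copy of it.
virt_flag() {
  local flags
  flags=$(grep -m1 '^flags' || true)
  case " $flags " in
    *" vmx "*) echo "vmx" ;;   # Intel VT-x
    *" svm "*) echo "svm" ;;   # AMD-V
    *) echo "none" ;;
  esac
}

# Only meaningful on Linux, where /proc/cpuinfo exists
if [ -r /proc/cpuinfo ]; then
  virt_flag < /proc/cpuinfo
fi
```

A result of "none" can also mean you are inside a VM that does not expose the flag, so it is not proof that the physical CPU lacks support.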

I opted for a combination of rootless Podman + systemd for container lifecycle management. Quadlets are also now a thing, so I see even less reason to go down the k8s path. I will almost certainly give Incus a try soon though.
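For anyone who hasn't seen a Quadlet yet, here is roughly what one looks like. This is a minimal sketch and not my actual unit: the write_quadlet helper, the image tag, the port and the volume name are all assumptions picked for illustration.

```shell
# Write a minimal Quadlet unit for a rootless container into the given directory.
# The unit name, image tag, port and volume name are illustrative assumptions.
write_quadlet() {
  local dir=$1
  mkdir -p "$dir"
  cat > "$dir/jellyfin.container" <<'EOF'
[Unit]
Description=Jellyfin media server (example)

[Container]
Image=docker.io/jellyfin/jellyfin:latest
PublishPort=8096:8096
Volume=jellyfin-config:/config

[Service]
Restart=always

[Install]
WantedBy=default.target
EOF
}

# Rootless Quadlet files are read from ~/.config/containers/systemd/.
# After writing one, reload systemd so the service unit gets generated:
#   write_quadlet "$HOME/.config/containers/systemd"
#   systemctl --user daemon-reload
#   systemctl --user start jellyfin.service
```

The appeal is that the container is described declaratively and systemd handles restarts, ordering and logs, which covers most of what I would otherwise want an orchestrator for.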

The network topology

Over time, my descent into madness deepened when I realized my ISP had NATed me, because port forwarding from my router stopped working. IPv4 addresses are rare and valuable creatures, I get it, but modern problems require modern, over-engineered solutions. I gained access to a VPS with a public IPv4 address on a cloud provider that would serve as my secret tunnel into the tower.

secret-tunnel

This is the main entrypoint for connections from the internet, where I installed:

- HAProxy

- Wireguard

- Crowdsec-firewall-bouncer

The main purpose of this VPS is to 1. give me a static public IP, 2. route traffic for specific services to specific ports, and 3. serve as a VPN. Pretty much everything is routed to the WireGuard tunnel IP of the tower. Of course, the wg-server is also used by my other devices when I’m adventuring abroad. Additionally, although all my external services are protected by Authelia, some clients such as the Finamp Android app aren’t able to authenticate against Authelia; Finamp expects a simple URL where Jellyfin can be reached, without the ability to set an auth token in the request headers. The solution I opted for is configuring Authelia to bypass auth for any clients in the wg subnet.

However, I still do need to monitor public endpoints behind authelia. The solution for this is to add a host header key in the Traefik router config for that particular service. More on that in the How I use Traefik article.

HAProxy

The reason I did not use HAProxy’s http mode for HTTPS traffic is that I wanted Traefik on the tower to handle all the SSL offloading, and I wanted to pass the source IP of the connection through to Traefik. What makes this trick possible is the magic of the proxy protocol. The tower sees all.

~/projects/gwt/files/haproxy/haproxy.cfg.j2

...

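frontend redirect-to-https
    # http-request rules only take effect in HTTP mode
    mode http
    bind :::80 v4v6
    http-request redirect scheme https unless { ssl_fc }

frontend frontend-https
    # plain TCP passthrough; TLS is not terminated here
    mode tcp
    bind :::443 v4v6
    default_backend backend-gwt-https

backend backend-gwt-https
    mode tcp
    # send-proxy-v2 prepends a PROXY protocol v2 header carrying the client address
    server upstream-gwt {{ common_wireguard_peer1_ipv4 }}:443 send-proxy-v2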

...
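To demystify the proxy protocol a little: the proxy prepends a small header to the TCP stream that states who the real client is, and the receiving side has to be configured to expect it. The config above uses v2, which is binary. The sketch below builds the older, human-readable v1 form instead, since it carries the same address information and is easier to look at. proxy_v1_header is a made-up helper and the addresses come from the documentation ranges, not from my setup.

```shell
# Build the human-readable PROXY protocol v1 line for an IPv4 connection.
# Arguments: client IP, destination IP, client port, destination port.
proxy_v1_header() {
  printf 'PROXY TCP4 %s %s %s %s\r\n' "$1" "$2" "$3" "$4"
}

# Example with documentation-range addresses (not real hosts)
proxy_v1_header 203.0.113.7 192.0.2.10 51234 443
```

Without this header, everything arriving through the tunnel would appear to come from the VPS, which would make IP-based rules on the tower useless.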

Wireguard

More details and light drama here.

DNS

More details in How I use Traefik.

Crowdsec

Crowdsec is basically a WAF that blocks malicious connection attempts from IPs on various crowd-sourced blocklists and also detects common attacks like port-scanning. Kind of like fail2ban, but with a couple more components. For the secret-tunnel I installed the firewall bouncer. The gwt itself hosts the main Crowdsec container that the bouncer authenticates against.

What about ipv6?

It’s on my to-do list. A full IPv6 setup would make WireGuard unnecessary, since I can assign the tower a globally unique address via my FRITZ!Box router, but I would still need a way to handle IPv4 client connections (which is certainly possible with HAProxy).

When will the torment end?

Never. The tower is growing and so is this blog post.